Chatbots: Facebook Messenger as the next step towards the future

Captain Kirk: "Computer, go to sensor probe. Any unusual readings?" Enterprise computer: "No decipherable reading on females. However, unusual reading on male board members. Detecting high respiration patterns, perspiration rates up, heartbeat rapid, blood pressure higher than normal."

More than 50 years ago, people were already dreaming of talking to computer systems as naturally as to other people. In the mid-1960s, Star Trek creator Gene Roddenberry had the Enterprise crew communicate with the ship's computer through a kind of voice interface, realizing his idea of interacting with an artificial intelligence, at least on screen. In doing so, Roddenberry was far ahead of his time.

In reality, human-machine interaction initially took place at the level of pure code. In the early 1980s, MS-DOS brought text-based command input to a broad audience of PC users. In the early 1990s, Windows 3.0 (1990) and Windows 3.1 (1992) made point-and-click interaction mainstream. With the spread of smartphones and tablets, the age of touch input began. And in 2011, Apple presented Siri (often expanded as "Speech Interpretation and Recognition Interface"). Other voice assistants such as Cortana, Google Now and Amazon Alexa followed: all technologies that take up Roddenberry's originally fictional idea and, thanks to artificial intelligence, are starting to supersede earlier user interfaces.

Voice assistants, however, are not the only new means of communication that make artificial intelligence (AI) more relevant than ever. Chatbots in particular currently enjoy a strong media presence. Whether "Der Bote", a debt-collection chatbot of the German savings bank Sparkasse, Mildred, the airfare search engine, or Poncho, the weatherbot (see the picture below): chatbots already cover a wide range of applications.

Bots already have a wide range of applications. Shown here: Poncho, the weatherbot.

Interaction possibilities between user and messenger

Many chatbots have one thing in common: Facebook. Through its integrated Messenger, the social network offers a platform on which bot developers can build programs based on the Messenger API (application programming interface).

This offers several advantages. One of them is that developers do not have to start from scratch: the core of the system, including the chat interface and message delivery, is already in place. It is up to the developer to decide how, and with which information, the bot responds to the messages it receives through the API.
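To make the "responding to the API" part more concrete, here is a minimal sketch in TypeScript (which compiles to JavaScript for Node.js). It only builds the HTTP request for a plain text reply and does not send it. It assumes the Send API endpoint `https://graph.facebook.com/<version>/me/messages`, authenticated with a page access token passed as the `access_token` query parameter; the function name `buildTextReply` and the default version `v2.6` are illustrative assumptions, so check the current Messenger Platform reference before relying on them.

```typescript
// Minimal sketch: build (but do not send) a Send API request for a text reply.
// Endpoint shape and field names follow the Messenger Send API as we understand it;
// the default API version is a placeholder assumption.

interface SendRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string; // JSON-encoded payload
}

function buildTextReply(
  recipientId: string,
  text: string,
  pageAccessToken: string,
  apiVersion = "v2.6", // hypothetical default; use the version your app targets
): SendRequest {
  const payload = {
    recipient: { id: recipientId }, // page-scoped ID of the user
    messaging_type: "RESPONSE",     // this message answers something the user sent
    message: { text },
  };
  return {
    url: `https://graph.facebook.com/${apiVersion}/me/messages?access_token=${encodeURIComponent(pageAccessToken)}`,
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(payload),
  };
}
```

The resulting object can be handed to any HTTP client, for example `fetch(req.url, { method: req.method, headers: req.headers, body: req.body })` in a runtime that provides `fetch`. Keeping request construction separate from sending makes the reply logic easy to test without network access.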

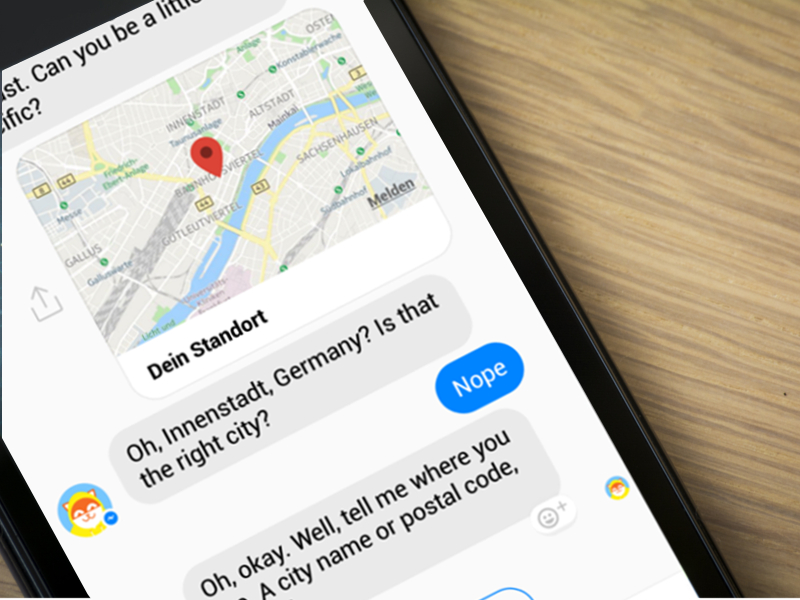

Users are accustomed to certain workflows and usability standards from Windows, Android and the like. This is why Facebook also tries to standardize the look and feel of Messenger with a manageable number of interaction options, such as ready-made templates, different content types, buttons and quick replies.

The API documentation describes five different content types (text, audio, file, image and video), eight different structured response types (button, generic, list, receipt and four templates specifically tailored to flight information), seven different types of buttons (URL, postback, call, share, buy, log in and log out), as well as different options for quick replies, which provide a kind of pre-fabricated, short response.
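The interaction options listed above are plain JSON objects placed in the `message` field of a Send API request. The following sketch builds two of them: a set of quick replies and a button template. The field names (`quick_replies`, `content_type`, `template_type`, `web_url`, `postback`, `phone_number`) follow Messenger's documented format as we understand it; the helper names and the `QR_...` payload naming scheme are hypothetical, and limits such as the maximum number of buttons should be checked against the current documentation.

```typescript
// Sketch: message payloads for two of Messenger's interaction options.
// Field names follow the documented format as we understand it; verify limits
// (e.g. the maximum number of buttons) against the current docs.

interface QuickReply {
  content_type: "text";
  title: string;   // label shown to the user
  payload: string; // developer-defined string sent back to the bot
}

type Button =
  | { type: "web_url"; url: string; title: string }
  | { type: "postback"; title: string; payload: string }
  | { type: "phone_number"; title: string; payload: string }; // payload holds the phone number

function quickReplyMessage(text: string, options: string[]) {
  const quick_replies: QuickReply[] = options.map((title): QuickReply => ({
    content_type: "text",
    title,
    // Hypothetical naming scheme: "New York" -> "QR_NEW_YORK"
    payload: `QR_${title.toUpperCase().replace(/\s+/g, "_")}`,
  }));
  return { text, quick_replies };
}

function buttonTemplateMessage(text: string, buttons: Button[]) {
  // The button template accepts only a few buttons (3, per our reading of the docs).
  if (buttons.length < 1 || buttons.length > 3) {
    throw new RangeError("button template needs 1 to 3 buttons");
  }
  return {
    attachment: {
      type: "template",
      payload: { template_type: "button", text, buttons },
    },
  };
}
```

Either return value would go into the `message` field of a Send API request. Encoding a stable `payload` alongside the human-readable `title` lets the bot react to the payload even if the label text changes later.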

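Once the webhook has been connected, the user can chat with the bot in the usual chat window. The webhook is the URL on the bot server to which Facebook delivers incoming messages; it is verified during setup, which establishes trusted communication between the Facebook Messenger API and the bot server.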
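Before Facebook delivers any messages, it checks the webhook with a GET request carrying the query parameters `hub.mode`, `hub.verify_token` and `hub.challenge`; the server must echo the challenge back if the token matches one chosen by the developer. A minimal, framework-free sketch of that check follows. The function name `handleVerification` and the token value are hypothetical, and wiring it into an actual HTTP server is left out.

```typescript
// Sketch of the webhook verification handshake (GET request from Facebook).
// Parameter names follow the Messenger Platform setup guide as we understand it;
// the verify token is a value the developer chooses when registering the webhook.

interface VerifyResult {
  status: number;
  body: string;
}

function handleVerification(query: URLSearchParams, verifyToken: string): VerifyResult {
  const mode = query.get("hub.mode");
  const token = query.get("hub.verify_token");
  const challenge = query.get("hub.challenge");

  if (mode === "subscribe" && token === verifyToken && challenge !== null) {
    // Echo the challenge to prove that this server belongs to the bot.
    return { status: 200, body: challenge };
  }
  // Wrong or missing token: refuse the subscription.
  return { status: 403, body: "Forbidden" };
}
```

For example, `handleVerification(new URLSearchParams("hub.mode=subscribe&hub.verify_token=my-secret&hub.challenge=1158201444"), "my-secret")` returns `{ status: 200, body: "1158201444" }`. In production, incoming POST requests are typically also checked against the `X-Hub-Signature-256` header, an HMAC of the request body computed with the app secret; that step needs a crypto library and is omitted here.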

Facebook recommends using JavaScript for bot development, more specifically Node.js, a JavaScript runtime environment. Node.js is widely used by developers, and its event-driven, non-blocking I/O model keeps communication between client and server resource-efficient. This is a major advantage: a single process can handle a large number of simultaneous network connections, which helps keep response times short.
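The benefit of non-blocking I/O can be illustrated without a real server. In this sketch, a timer stands in for slow I/O such as a database query or a Send API call (a simplification for illustration). Because `await` hands control back to the event loop, many simulated requests wait at the same time instead of one after another; the function names are hypothetical.

```typescript
// Sketch: non-blocking I/O lets one Node.js process serve many requests at once.
// A timer stands in for slow I/O (database query, Send API call); this is a
// simulation, not a real bot server.

function simulatedIo(ms: number): Promise<void> {
  return new Promise<void>((resolve) => setTimeout(resolve, ms));
}

async function handleRequest(id: number, ioMs: number): Promise<string> {
  // While this request waits, the event loop is free to run other requests.
  await simulatedIo(ioMs);
  return `reply-${id}`;
}

async function serveConcurrently(
  count: number,
  ioMs: number,
): Promise<{ replies: string[]; elapsedMs: number }> {
  const start = Date.now();
  const ids = Array.from({ length: count }, (_, i) => i);
  // Start all requests at once and wait for all of them; result order is preserved.
  const replies = await Promise.all(ids.map((id) => handleRequest(id, ioMs)));
  return { replies, elapsedMs: Date.now() - start };
}
```

Calling `serveConcurrently(100, 50).then((r) => console.log(r.replies.length, r.elapsedMs))` should print `100` and an elapsed time close to 50 ms on an idle machine, whereas handling the requests strictly one after another would take about 5 seconds. CPU-heavy work does not benefit in the same way, since it blocks the single event-loop thread.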

A typical interaction between bot and user goes like this: the bot greets the user and presents a first set of response options; the user selects one of them; the bot responds and leads the user to the next question, to which the user responds, and so on. This "rule-based" interaction between user and bot reflects the current standard. The user is guided through a decision tree with more or fewer branches to choose from. The user input itself can take any of the response types mentioned above.
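A rule-based bot of this kind boils down to a small state machine. The sketch below models the decision tree as a map from node IDs to a prompt and the allowed answers. All node names and texts are made up for illustration, and a real bot would send `reply` back through the Send API rather than simply return it.

```typescript
// Sketch of a rule-based bot: a decision tree in which each node has a prompt
// and a fixed set of answers leading to the next node.
// Node IDs and texts are hypothetical examples, not taken from any real bot.

interface DialogNode {
  prompt: string;
  options: Record<string, string>; // user answer -> ID of the next node
}

const tree: Record<string, DialogNode> = {
  start: { prompt: "Hi! What would you like to know?", options: { Weather: "weather", Help: "help" } },
  weather: { prompt: "Today or tomorrow?", options: { Today: "today", Tomorrow: "tomorrow" } },
  today: { prompt: "Here is today's forecast. Anything else?", options: { "Start over": "start" } },
  tomorrow: { prompt: "Here is tomorrow's forecast. Anything else?", options: { "Start over": "start" } },
  help: { prompt: "Just tap one of the buttons.", options: { "Start over": "start" } },
};

// Returns the next node ID and the bot's reply. Unknown input keeps the user
// on the current node and repeats the available choices.
function step(currentId: string, userInput: string): { nextId: string; reply: string } {
  const node = tree[currentId];
  const nextId = node.options[userInput.trim()];
  if (nextId === undefined) {
    return { nextId: currentId, reply: `Please choose: ${Object.keys(node.options).join(", ")}` };
  }
  return { nextId, reply: tree[nextId].prompt };
}
```

For example, `step("start", "Weather")` moves the user to the `weather` node, while an unrecognized answer keeps the user where they are and lists the valid choices again, which is the typical fallback of a rule-based bot.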

Fun fact: For the bot, a user input such as tapping a quick reply is the same as if the user had typed the reply's text into the chat window. Most answer options are therefore only a visual alternative to typing by hand.
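This holds most directly for quick replies, which reach the bot as ordinary text messages that additionally carry a payload; postback buttons, as far as we know, arrive as a separate `postback` event. A small normalization step lets the dialog logic treat all of these inputs alike. The event shape below is a simplified assumption based on the webhook event format (only the fields used here are typed), and `normalize` is a hypothetical helper.

```typescript
// Sketch: reduce different incoming Messenger events to one "what did the user say?"
// value, so the dialog logic can treat a tapped option like typed text.
// Field names follow the webhook event format as we understand it (simplified).

interface MessagingEvent {
  sender: { id: string };
  message?: { text?: string; quick_reply?: { payload: string } };
  postback?: { title: string; payload: string };
}

interface UserInput {
  senderId: string;
  text: string;     // what the user typed or tapped
  payload?: string; // machine-readable value, if the input came from a quick reply or button
}

function normalize(event: MessagingEvent): UserInput | null {
  const senderId = event.sender.id;
  if (event.postback) {
    // Postback buttons arrive as their own event type, not as a chat message.
    return { senderId, text: event.postback.title, payload: event.postback.payload };
  }
  const text = event.message?.text;
  if (text !== undefined) {
    // Typed text and quick replies both arrive as text messages; quick replies add a payload.
    return { senderId, text, payload: event.message?.quick_reply?.payload };
  }
  return null; // e.g. attachments, which this sketch ignores
}
```

With this in place, the decision logic only ever sees a `UserInput`, regardless of whether the user typed, tapped a quick reply or pressed a button.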

The conversation is logged in the database of the previously configured server, not only so that the chat history can be evaluated later, but also to keep track of each user's current state. This is where MongoDB comes in handy: it stores data as documents that map directly onto JavaScript objects, rather than as rows in tables, as MySQL does. We have already discussed the conclusions that can be drawn from this stored data in one of our previous blog posts on chatbots.
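Because MongoDB stores BSON documents that map naturally onto JavaScript objects, a per-user session can simply mirror the bot's own data structures, and saving a new dialog state plus a log entry is a single update. The sketch below only builds the filter, update and options one might pass to the official Node.js driver's `collection.updateOne(filter, update, options)`; the document layout, the field names and `buildSessionUpdate` are hypothetical.

```typescript
// Sketch: a per-user session document and the update that records one message.
// The result is meant for the MongoDB Node.js driver's
// collection.updateOne(filter, update, options); field names are hypothetical.

interface LoggedMessage {
  from: "user" | "bot";
  text: string;
  at: Date;
}

interface UserSession {
  userId: string;           // page-scoped Messenger ID
  state: string;            // current node in the bot's decision tree
  history: LoggedMessage[]; // full chat log for later analysis
}

function buildSessionUpdate(userId: string, newState: string, message: LoggedMessage) {
  const filter: Pick<UserSession, "userId"> = { userId };
  return {
    filter,
    update: {
      $set: { state: newState },   // remember where the user is in the dialog
      $push: { history: message }, // append to the chat log
    },
    options: { upsert: true },     // create the session document on first contact
  };
}
```

`$set` overwrites the current dialog state, `$push` appends to the `history` array (creating it if the field does not exist yet), and `upsert: true` inserts a new session document, including `userId` from the filter, the first time a user writes in.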

Natural language processing (NLP) systems as the next step towards the Holodeck

As you can see, today's chatbots still have little to do with actual artificial intelligence, since they work with answer templates provided by the developer. The next step beyond this "rule-based" bot is the AI-supported bot, in which the Messenger API is combined with an NLP (natural language processing) system. Such a system analyzes the user's free-text sentences, tries to recognize what the user wants, and can learn from further examples over time, so the bot is no longer limited to predefined answer paths. In everyday life, however, these AI chatbots do not yet play a significant role.
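Real NLP services rely on trained statistical models, so the following is only a deliberately naive stand-in that shows the basic idea of intent recognition: mapping a free-text sentence to one of several known intents. It scores each intent by how many distinct words the sentence shares with that intent's example phrases. The intents, example phrases and the function `classify` are all hypothetical.

```typescript
// Deliberately naive stand-in for an NLP service (not how real NLP works):
// pick the intent whose example phrases share the most distinct words with
// the user's sentence. Intents and example phrases are hypothetical.

const examples: Record<string, string[]> = {
  weather: ["what is the weather like", "will it rain tomorrow", "forecast for today"],
  flights: ["find me a cheap flight", "flights to berlin", "book a plane ticket"],
};

// Lowercase the sentence and split it into alphabetic words.
function tokenize(sentence: string): string[] {
  return sentence.toLowerCase().match(/[a-z]+/g) ?? [];
}

function classify(sentence: string): { intent: string | null; score: number } {
  const words = tokenize(sentence);
  let best: { intent: string | null; score: number } = { intent: null, score: 0 };
  for (const intent of Object.keys(examples)) {
    // Vocabulary of this intent: every word from its example phrases.
    const vocab = new Set<string>();
    for (const phrase of examples[intent]) {
      for (const w of tokenize(phrase)) vocab.add(w);
    }
    // Score: number of distinct user words found in that vocabulary.
    const matched = new Set<string>();
    for (const w of words) {
      if (vocab.has(w)) matched.add(w);
    }
    // Strictly greater: on a tie, the intent listed first wins.
    if (matched.size > best.score) best = { intent, score: matched.size };
  }
  return best; // intent stays null if no word matched at all
}
```

`classify("Will it rain in Berlin today?")` picks `weather`, because four of its words appear in the weather examples but only one in the flight examples. Word overlap breaks down quickly with synonyms, typos or negation, which is exactly the gap that trained NLP models aim to close.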

Whether with or without AI, one thing is important: developing a chatbot is not just a technical task. Because the bot, as an interface between a company and its users, always embodies a brand as well, much of the development effort goes into user experience. If the interaction with the bot feels too artificial and offers the user no real benefit or entertainment value, the brand image may suffer in the worst case. A well-designed, technically sound bot, on the other hand, can strengthen how a brand is perceived.

Conclusion: It will still take some time until humans can communicate with a computer truly "freely". Voice recognition and bot interaction are still bound by strict rules; they can simulate a kind of artificial intelligence, but do not yet allow real understanding or genuinely independent further development. This will change within the next few years, however, and then perhaps it won't be too long until we have the Holodeck.